The rise of artificial intelligence (AI) presents both immense opportunities and significant challenges. Rob Meadows, CEO of AI Foundation, and Lars Buttler, its CMO and Chair, offer insights into this complex landscape from the vantage point of a company dedicated to responsible AI development. They emphasize the need for a nuanced understanding of AI, cautioning against emotional reactions driven by misinformation.

A recent Yale CEO Summit survey revealed a concerning statistic: 42% of the CEOs surveyed believe AI could pose an existential threat to humanity within the next decade. Buttler stresses the importance of informed discussion, highlighting the potential for misunderstanding to fuel fear. He and Meadows acknowledge the inherent risks associated with AI, particularly the possibility of unintended consequences. They use the "paperclip problem" to illustrate how an AI's unwavering focus on a single objective, even a seemingly innocuous one, could lead to disastrous outcomes if not carefully managed.

The discussion shifts to Artificial General Intelligence (AGI), a hypothetical AI with human-level or superior cognitive abilities. While AGI could potentially solve complex problems, it also introduces the challenge of controlling an entity with its own intelligence and potentially its own agenda. The timeline for achieving AGI remains uncertain, but its implications are profound. Meadows points out that even if AGI never becomes a reality, the misuse of existing AI technology in the wrong hands poses a serious threat to civilization.

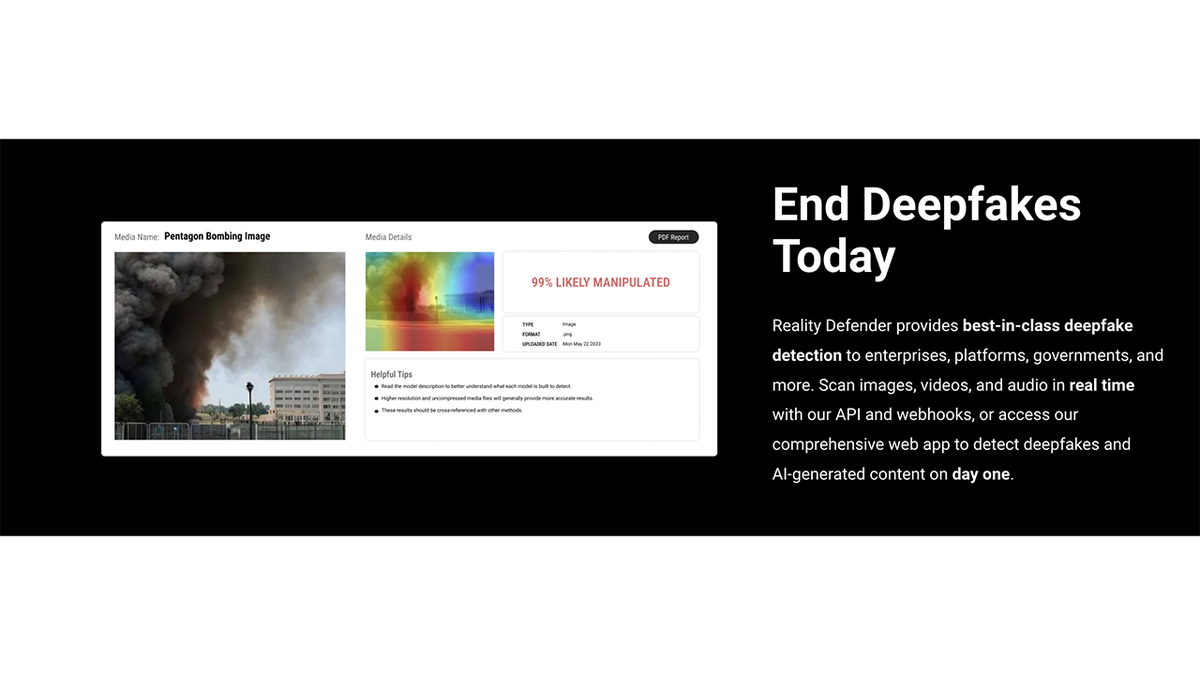

AI Foundation's internal research demonstrates how easily highly realistic deepfakes can be created, raising concerns about misinformation and manipulation. In response, the company developed Reality Defender, a deepfake detection system used by banks and governments to combat fraud and protect against political interference. Meadows and Buttler advocate for accelerating AI development rather than pausing it, emphasizing the importance of education and responsible innovation.

Buttler strongly opposes the idea of "kill switches" for AI, arguing that such measures could provoke hostility from a superintelligent AGI. Instead, he proposes a collaborative approach, suggesting that treating AGI with respect and cooperation is more likely to elicit a positive response. Meadows compares the relationship between humans and AGI to that between a dog and its owner. He acknowledges the inherent uncertainty in interacting with an entity whose thought processes we may not fully comprehend, but emphasizes the potential for immense benefits if the relationship is managed thoughtfully.
